RS00 - Start menu

RS12 - Overview of master data locks

RSA0 Content Settings Maintenance

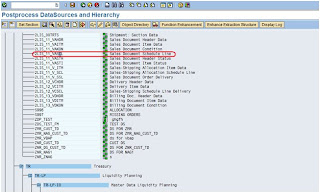

RSA1 BW Administrator Workbench

RSA10 Realtime Test Interface Srce System

RSA11 Calling up AWB with the IC tree

RSA12 Calling up AWB with the IS tree

RSA13 Calling up AWB with the LG tree

RSA14 Calling up AWB with the IO tree

RSA15 Calling up AWB with the ODS tree

RSA1OLD BW Administrator Workbench (old)

RSA2 OLTP Metadata Repository

RSA3 Extractor Checker

RSA5 Install Business Content

RSA6 Maintain DataSources

RSA7 BW Delta Queue Monitor

RSA8 DataSource Repository

RSA9 Transfer Application Components

RSADMIN RSADMIN maintenance

RSADRTC70TOADR11 Conversion of table TC70 in ADR11

RSANWB Model the Analysis Process

RSANWB_CRM_ATTR Fill CRM Attributes

RSANWB_EXEC Execute Analysis Process

RSANWB_IMP Calculation of Importance

RSANWB_START_ALL Model the Analysis Process

RSANWB_SURVEY Analysis Process: Create Target

RSAN_CLTV CLTV Modeling

RSARCH_ADMIN BW Archive Administration

RSARFCEX Variant for RSARFCEX

RSASSIBTCH Schedule Assistant in Background

RSATTR Attribute/Hierarchy Realignment Run

RSAWB New AWB

RSAWBSETTINGSDEL Delete user settings of the AWB

RSB0 Maintain OLAP authorization object

RSB1 Display authorization object

RSB2 Data Marts Generation Center

RSBBS Maintaining BW Sender-Receiver

RSBBS_WEB Transaction for the RRI in the Web

RSBCTMA_AC xCBL Action Codes

RSBCTMA_DT Mapping of Ext./Int. Document Type

RSBEB Business Explorer Browser

RSBMO2 Open Hub Monitor

RSBO Open Hub Maintenance

RSBOH1 Open Hub Maintenance

RSBOH2 Open Hub Maintenance

RSBOH3 Open Hub Maintenance

RSBO_EXTRACT Auth Check Open Hub Extraction

RSBROWSER BW Browser

RSBWREMOTE Create Warehouse User

RSCATTAWB CATT Admin. Workbench

RSCDS Summarization routine

RSCONCHA Channel conversion

RSCONFAV Favorites Conversion

RSCRMDEBUG Set Debug Options

RSCRMISQ Regis. of Infosets for Target Groups

RSCRMMDX Edit MDX

RSCRMMON Monitor Query Extracts

RSCRMSCEN Regist. Closed-Loop Scenarios

RSCRM_BAPI Test Program for RSCRM Interface

RSCRM_REPORT BW Queries with ODBO (to 2nd 0B)

RSCRT BW Monitor (Near)-Real-Time Loading

RSCR_MAINT_PUBLISH Maint. of Publishing Variables CR/CE

RSCR_MAINT_URL Maint. of URL Variables for CR/CE

RSCUSTA Maintain BW Settings

RSCUSTA2 ODS Settings

RSCUSTV1 BW Customizing - View 1

RSCUSTV10 BW Customizing - View 10

RSCUSTV11 BW Customizing - View 11

RSCUSTV12 Microsoft Analysis Services

RSCUSTV13 RRI Settings for Web Reporting

RSCUSTV14 OLAP: Cache Parameters

RSCUSTV15 BW Customizing - View 11

RSCUSTV16 BW Reporting

RSCUSTV17 Settings: Currency Translation

RSCUSTV18 DB Connect Settings

RSCUSTV19 InfoSet Settings

RSCUSTV2 BW Customizing - View 2

RSCUSTV3 BW Customizing - View 3

RSCUSTV4 BW Customizing - View 4

RSCUSTV5 BW Customizing - View 5

RSCUSTV6 BW Customizing - View 6

RSCUSTV7 BW Customizing - View 7

RSCUSTV8 BW Customizing - View 8

RSCUSTV9 BW Customizing - View 9

RSD1 Characteristic maintenance

RSD2 Maintenance of key figures

RSD3 Maintenance of units

RSD4 Maintenance of time characteristics

RSD5 Internal: Maint. of Techn. Chars

RSDBC DB connect

RSDB_ADD_ID_2_CRM Create External ID for CRM-GP

RSDB_INIT Initial Download of D&B Data

RSDCUBE Start: InfoCube editing

RSDCUBED Start: InfoCube editing

RSDCUBEM Start: InfoCube editing

RSDDV Maintaining Aggregates

RSDIOBC Start: InfoObject catalog editing

RSDIOBCD Start: InfoObject catalog editing

RSDIOBCM Start: InfoObject catalog editing

RSDL DB Connect - Test Program

RSDMD Master Data Maintenance w.Prev. Sel.

RSDMD_TEST Master Data Test

RSDMPRO Initial Screen: MultiProvider Proc.

RSDMPROD Initial Screen: MultiProvider Proc.

RSDMPROM Initial Screen: MultiProvider Proc.

RSDMWB Data Mining Workbench

RSDODS Initial Screen: ODS Object Processing

RSDODSD Initial Screen: ODS Proces. (Deliv.)

RSDPMDDBSETUP Creates a MOLAP Database in MSAS

RSDPMOLAPDS MOLAP DataSource creation

RSDPRFCDSETUP Create MOLAP Rfc Tests

RSDSD DataSource Documentation

RSDU_SHOWTEMPINCTAB

RSDV Validity Slice Maintenance

RSD_ACAT Maintain InfoObject catalog

RSEDIT Old editor

RSEIDOCM Variant for RSEIDOCM

RSENQ Display of Lock Log

RSEOUT00 Variant for RSEOUT00

RSFH Test Transaction Data Extractors

RSFLAT Flat MDX

RSFREQUPL Frequent upload from source systems

RSGWLST Accessible Gateways

RSH1 Edit hierarchy initial screen

RSH3 Simulate hierarchies

RSHIER Hierarchy maintenance w/o AdmWB

RSHIERINT Hierarchy maintenance from AdmWB

RSHIERSIM Simulate hierarchies

RSICUBE Maintain/Change InfoCubes (Internal)

RSIMG BW IMG

RSIMPCUR Load Exchange Rates from File

RSINPUT Manual Data Entry

RSIR_DELTATRACK KPro Delta Tracking

RSISET Maintain InfoSets

RSKC Maintaining the Permitted Extra Chars

RSLDAPSYNC_USER LDAP Synchronization of Users

RSLGMP Maintain RSLOGSYSMAP

RSMD Extractor Checker

RSMDCNVEXIT Conversion to Consistent Internal Values

RSMDEXITON Activate Conversion Routine

RSMO Data Load Monitor Start

RSMON BW Administrator Workbench

RSMONCOLOR Traffic light color in the Monitor

RSMONITOR_DB D&B Integration

RSMONMAIL Mail Addresses for Monitor Assistant

RSNPGTEST Test Network Plan Control

RSNPGTEST2 Test Network Plan Control

RSNSPACE BW Namespace Maintenance

RSO2 OLTP Metadata Repository

RSO3 Set Up Deltas for Master Data

RSOCONTENT Administration of a Content System

RSOCOPY Copy from TLOGO Objects

RSODADMIN Administration BW Document Managemt.

RSOR BW Metadata Repository

RSORBCT BI Business Content Transfer

RSORMDR BW Metadata Repository

RSPC Process Chain Maintenance

RSPC1 Process Chain Display

RSPCM Monitor daily process chains

RSPFPAR Display profile parameter

RSQ02 Maintain InfoSets

RSQ10 SAP Query: Role Administration

RSQ11 InfoSet Query: Web reporting

RSRAJ Starts a Reporting Agent Job

RSRAM Reporting Agent Monitor

RSRAPS Manages Page Store

RSRCACHE OLAP: Cache Monitor

RSRCATTTRACE CATT transaction for trace tool

RSREP BW Administrator Workbench

RSRFCCHK RFC destinations with logon data

RSRHIERARCHYVIRT Maintain Virtual Time Hierarchies

RSRQ Data Load Monitor for a Request

RSRR_WEB Report-Report Interface in Web

RSRT Start of the report monitor

RSRT1 Start of the Report Monitor

RSRT2 Start of the Report Monitor

RSRTRACE Set trace configuration

RSRTRACETEST Trace tool configuration

RSRV Analysis and Repair of BW Objects

RSRVALT Analysis of the BW objects

RSR_TRACE Trace Monitor

RSR_WEB_VARIABLES Variable Entry in Web

RSSCD100_PFCG Change Docs for Role Administration

RSSCD100_PFCG_USER Change Docs for Role Assignment

RSSCM_APPL Application settings SCM4.0 and BW

RSSD Access for scheduler

RSSE Selection start InfoCube

RSSGPCLA Maintain program class

RSSG_BROWSER Simple Data Browser

RSSM Authorizations for Reporting

RSSMQ Start Query with User

RSSMTRACE Reporting Log Authorization

RSSTARTMON Starting the monitor in parallel processing

RSSU53 Display authorization check BW

RST22 Old Short-Dump Overview

RSTB Choose Object Name

RSTBHIST Table history

RSTG_BUPA Target Group Sel. Business Partners

RSTG_CUST Target Group Selection Customers

RSTG_DB Target Group Selection D&B

RSTG_DB_WEB Target Group Selection D&B

RSTPRFC Create Destination for After-Import

RSU0 Update rules overview

RSU1 Create update rules

RSU1I Create update rules

RSU1O Create Update Rules

RSU2 Change update rules

RSU2I Change update rules

RSU2O Change Update Rules

RSU3 Display update rules

RSU3I Display update rules

RSU3O Display Update Rules

RSU6 Delete update rules

RSU6I Delete update rules

RSU6O Delete update rules

RSU7 Data Extraction: Maintain Parameters

RSUSR003 Check standard user passwords

RSUSR200 List of Users per Login Date

RSWELOGD Delete Event Trace

RSWEWWDHMSHOW Display Background Job SWWERRE

RSWEWWDHSHOW Display Work Item Deadline Monitoring

RSWWCLEAR Execute Work Item Clearing Work

RSWWCOND Execute Work Item Rule Monitoring

RSWWDHEX Execute Work Item Deadline Monitoring

RSWWERRE Start RSWWERRE

RSZC Copying Queries between InfoCubes

RSZDELETE Deletion of query objects

RSZT Get Test Component

RSZTESTFB Shortcut Function Test Environment

RSZV Call up of view V_RSZGLOBV

RSZVERSION Set frontend version

RS_AWB_REMOTE Remote AWB Staging

RS_BCT_BWBEOTYP Maintain BW Backend Object Types

RS_DS_CHECK Check consistency request

RS_ISTD_REMOTE Maintain InfoSource

RS_LOGSYS_CHECK Source System Tool

RS_PERS_ACTIVATE Activation of BEx Personalization

RS_PERS_BOD_ACTIVATE Activate BEx Open Pers.

RS_PERS_BOD_DEACTIVA Deactivate Pers. for BEx Open

RS_PERS_VAR_ACTIVATE Activate Variable Pers.

RS_PERS_VAR_DEACTIVA Deactivate Pers. for Variables

RS_PERS_WTE_ACTIVATE Activate Web Template Pers.

RS_PERS_WTE_DEACTIVA Deactivate Pers. for Web Template

SP01 Spool

Thanks,

Chindam Praveen Kumar,

Blue Marlin Systems,

www.bluemarlinsys.com/bi