The following are the most commonly used methods for Business Objects Xcelsius Connectivity:

- Query as a Web Service.

- Live Office.

- SAP Netweaver BI Connector.

Query as a Web Service (QAAWS) is a Business Objects web service that can extract data ONLY from Business Objects universes. Each time a dashboard runs, a real-time query is executed against the actual data, so the response time depends on the amount of data requested for the dashboard. It is not a very efficient solution: those who use it need highly summarized tables in their data warehouse, and building them takes considerable effort. The advantages of this method are that security is already handled through the BO user IDs and that data is retrieved in real time whenever required. The disadvantage is that bulk data, such as more than 500 records returned by the web service, slows the dashboard down considerably. Moreover, there is no staging of the fetched data in the Xcelsius spreadsheet. Hence QAAWS can be used whenever:

- The response time from the data source is short.

- The data source provides data that can be readily consumed by the Dashboard.

- Real time data or live data is to be used in the dashboard.

- The query result set is very small and limited like a standard table.

- Multiple query result sets are to be placed in the same range of the spreadsheet.

- Multiple Universes are involved for the data required by the dashboard.

- Universe contains and is capable of implementing all the required logic.

- There is a need to document and manage queries in the QAAWS client.

- Portability is required as QAAWS can more easily be ported to other applications as well.
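
The point about highly summarized tables can be illustrated with a minimal Python sketch. It is not part of QAAWS or Xcelsius; the function names, the field names in the usage note, and the idea of checking the row count up front are assumptions made for illustration. Only the figure of roughly 500 records comes from the text above.

```python
from collections import defaultdict

# Rule of thumb from the text: more than about 500 records slows the dashboard.
MAX_DASHBOARD_ROWS = 500


def summarize(rows, key_fields, measure):
    """Collapse detail rows into one row per key combination, summing the measure."""
    totals = defaultdict(float)
    for row in rows:
        key = tuple(row[field] for field in key_fields)
        totals[key] += row[measure]
    # Rebuild dictionaries, ordered by key so the output is deterministic.
    return [
        {**dict(zip(key_fields, key)), measure: total}
        for key, total in sorted(totals.items())
    ]


def fits_dashboard(rows, limit=MAX_DASHBOARD_ROWS):
    """Return True when the result set is small enough for a live query."""
    return len(rows) <= limit
```

For example, calling `summarize(detail_rows, ["region", "month"], "sales")` on a list of dictionaries reduces the detail to one row per region and month, and `fits_dashboard` then shows whether the summarized set stays within the limit.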

Live Office can use Business Objects Web Intelligence (WEBI) or Crystal Reports as a source of data, and it can leverage the functionality of these reporting tools to summarize and format the data. The advantage is that the reports can be scheduled and presented using Business Objects InfoView, and the Xcelsius dashboard can pick its data from the latest report, thus avoiding the waiting time. The Live Office connectors let you bind multiple queries (views) to a single connector, which results in fewer enterprise connections and increases the level of parallelism in query execution. Live Office physically places the query output in the Excel workbook of the dashboard. The disadvantage is that users have to log on to Business Objects every time they need to access the dashboard; most companies do not prefer this method because it does not support single sign-on. Moreover, it does not work outside the network, and there are many instances where network connections break and reports are not refreshed. Dashboard performance also suffers when the extracted data is large, because the data is stored in the Xcelsius spreadsheet, so presenting historical or detailed data affects performance. Finally, Live Office can be used whenever:

- The query response from the data source is expected to take a long time.

- A lot of data processing is involved, such as ranking, grouping, or synchronization of queries.

- There is a need to use WEBI / Crystal Reports features such as formulas, variables and cross tabs.

- Additional logic needs to be added in the intermediary layer, that is, the report layer.

- Bursted or scheduled reports can be used as data sources without hitting the database.

- SAP is used as the data source, since Crystal Reports can directly access R/3 as well as BI.

- Data from multiple reports needs to be displayed on the dashboard.

- Simplicity is required, as Live Office appears as a simple Excel toolbar.
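
The two sets of criteria can be read together as a rough decision rule. The Python sketch below is only one simplified reading of them, not a Business Objects feature: the `Requirements` fields, the `suggest_connection` function, and the order of the checks are assumptions, and only the figure of roughly 500 records comes from the text.

```python
from dataclasses import dataclass

# Rule of thumb from the text: more than about 500 records slows the dashboard.
ROW_LIMIT = 500


@dataclass
class Requirements:
    """Hypothetical summary of what a dashboard needs from its data source."""
    expected_rows: int        # size of the result set the dashboard will load
    slow_source: bool         # the query response is expected to take long
    needs_report_logic: bool  # ranking, grouping, formulas, cross tabs, etc.


def suggest_connection(req: Requirements) -> str:
    """Suggest a connectivity method from a simplified reading of the criteria."""
    # Both methods keep the data in the spreadsheet, so large sets hurt either way.
    if req.expected_rows > ROW_LIMIT:
        return "Summarize first"
    # Long-running queries or report-level logic favor scheduled reports.
    if req.slow_source or req.needs_report_logic:
        return "Live Office"
    # A small, fast, ready-to-use result set suits a live web service query.
    return "QAAWS"
```

A call such as `suggest_connection(Requirements(expected_rows=100, slow_source=True, needs_report_logic=False))` returns `"Live Office"`. Real projects weigh further factors listed above, such as single sign-on, network access, and the number of universes involved.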

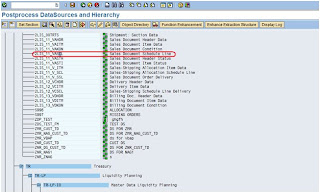

SAP Netweaver BI Connector integrates SAP data, based on a BI Query, with Business Objects Xcelsius dashboards. The BI dashboards are presented on the basis of this integration, and the reports are defined according to the user's requirements. The BI Query acts as the data source and consumes data from any of the BI data targets (DSO, InfoCube, Extractor, InfoSet, etc.). It involves the following steps:

- Designing the BI Query.

- Configuring the Xcelsius connectivity.

- Designing the Xcelsius canvas and mapping the components.

- Presenting as Xcelsius Dashboard.

Uday Kumar P,

uday_p@bmsmail.com

Blue Marlin Systems Inc.,

www.bluemarlinsys.com/bi/info/bipapers.php